I2SB: Image-to-Image Schrödinger Bridge

International Conference on Machine Learning (ICML), 2023

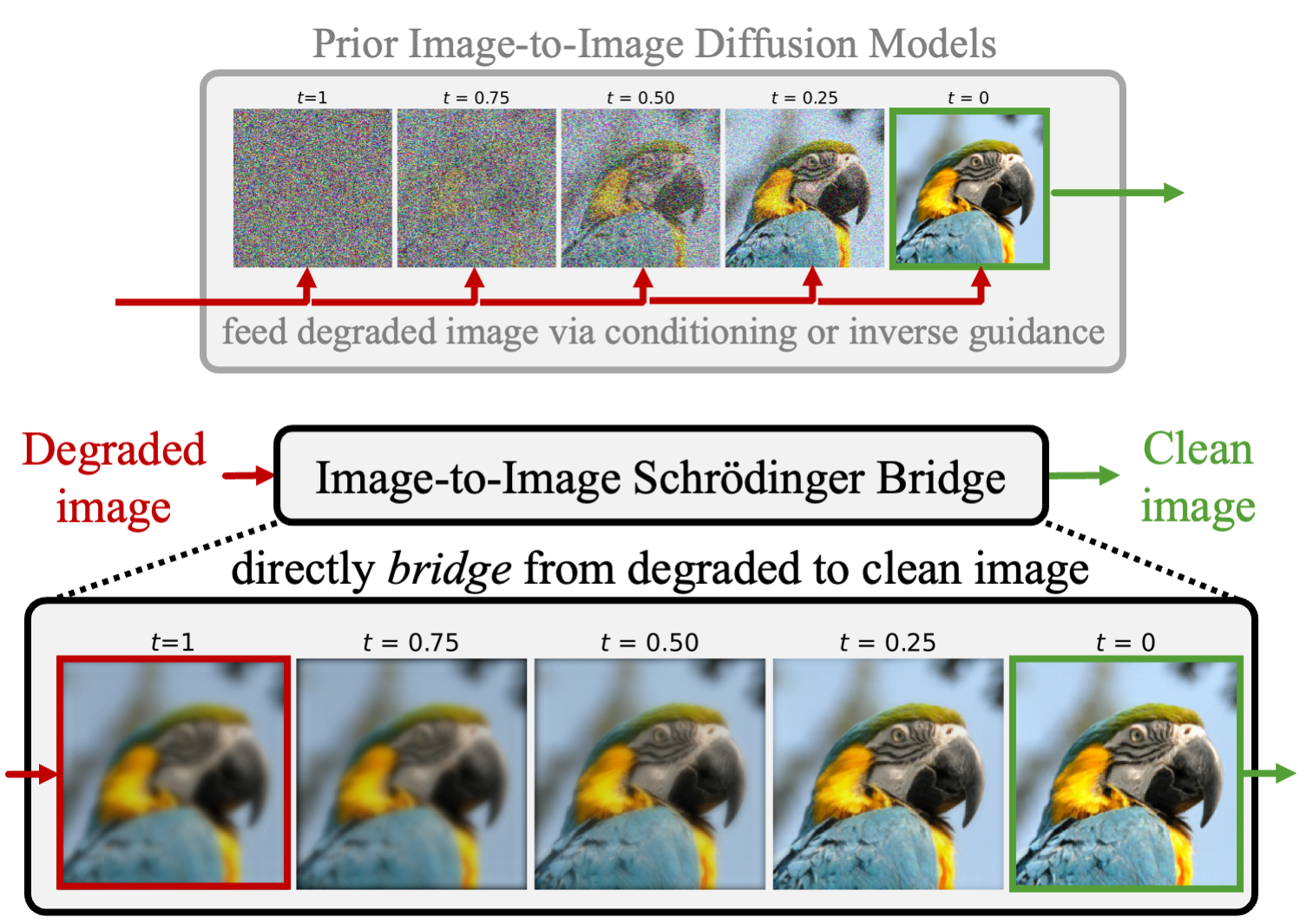

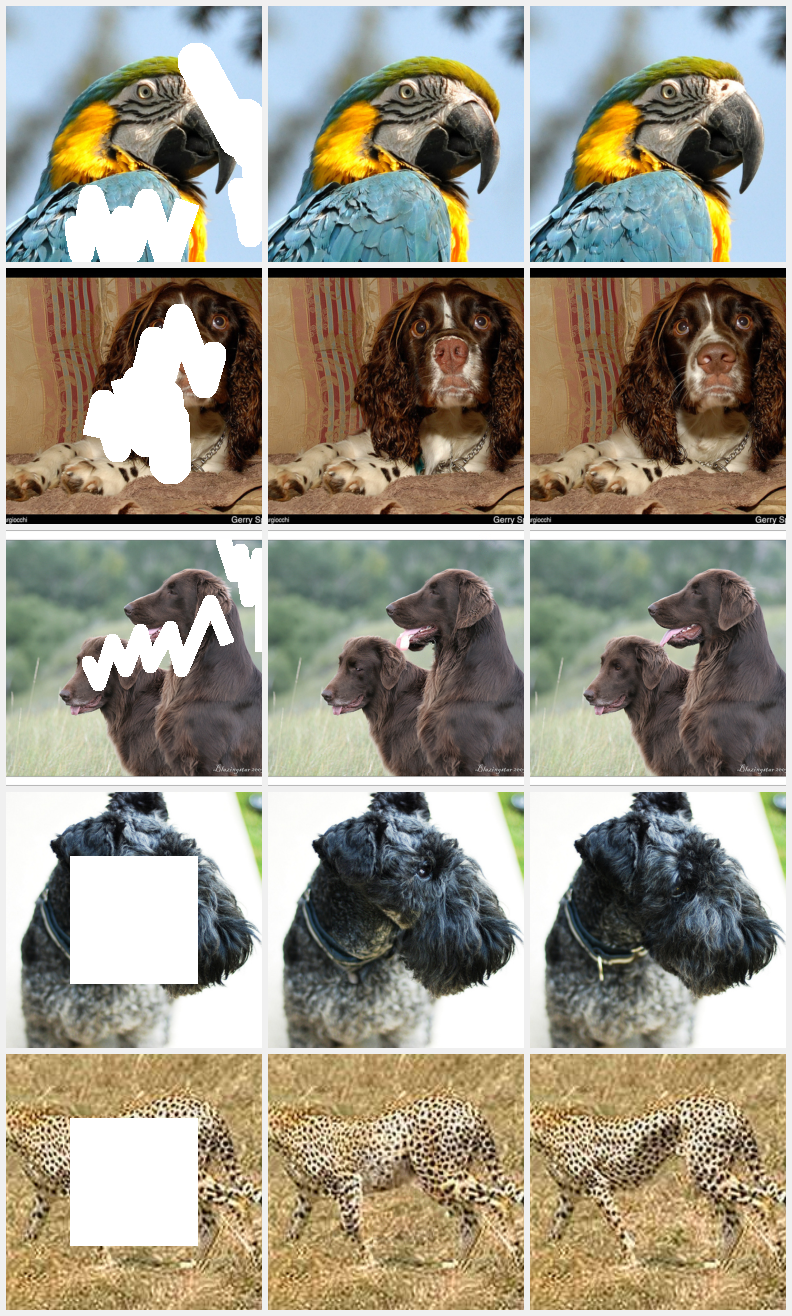

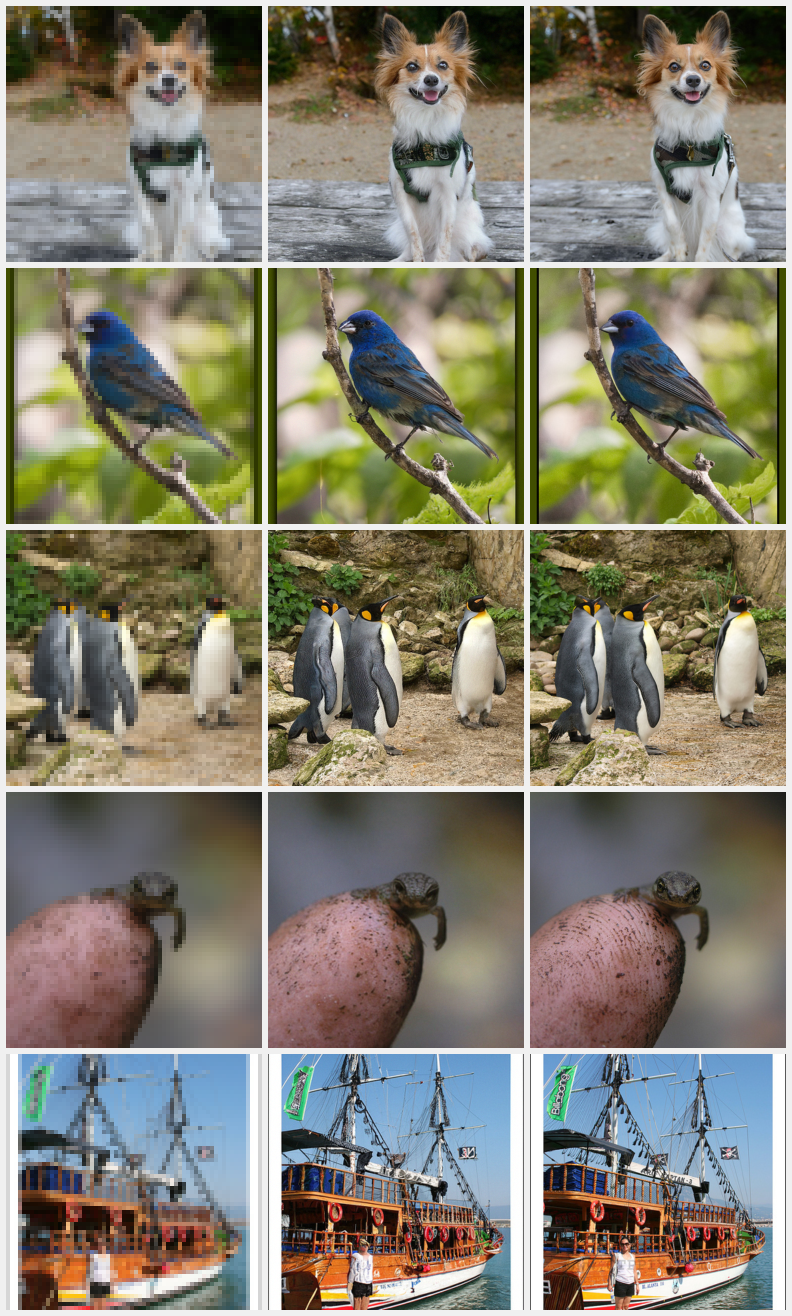

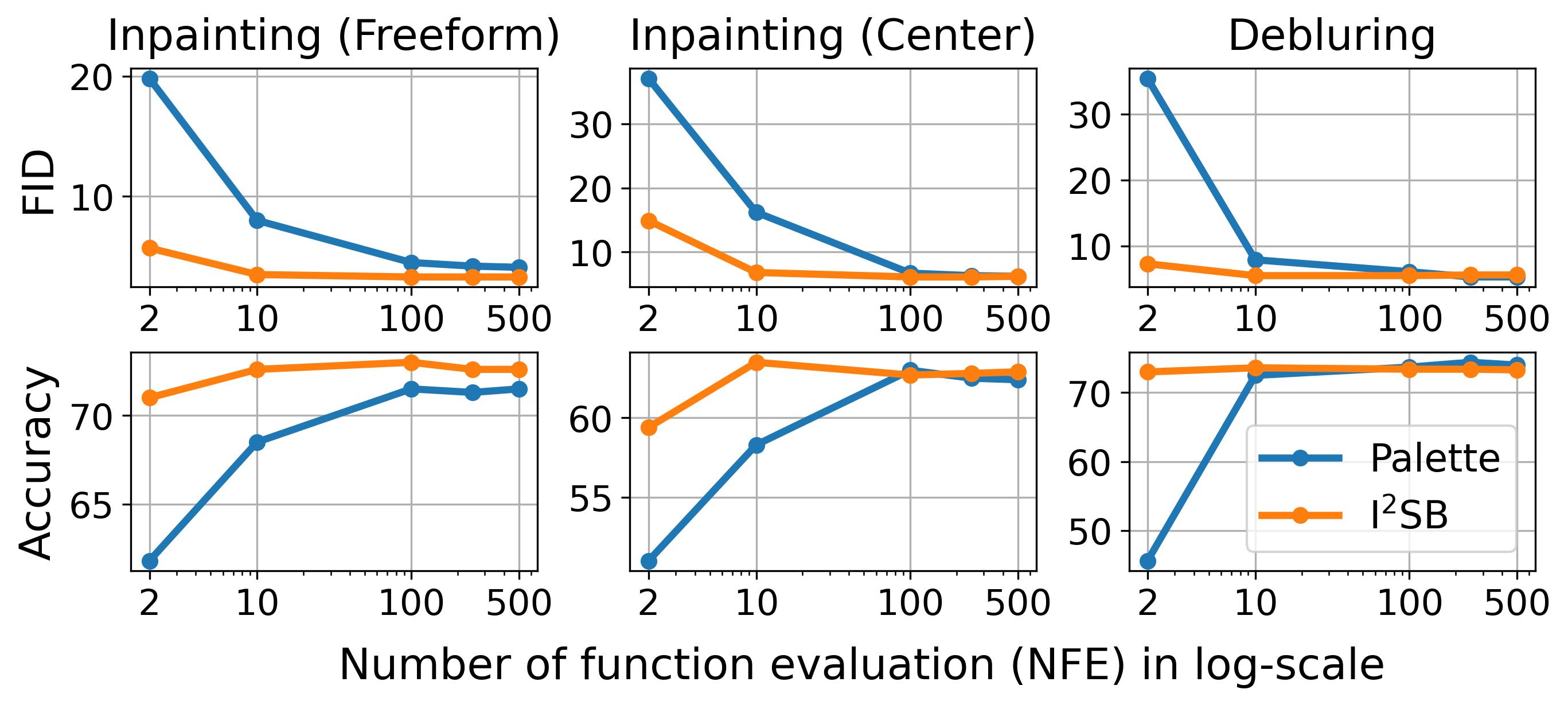

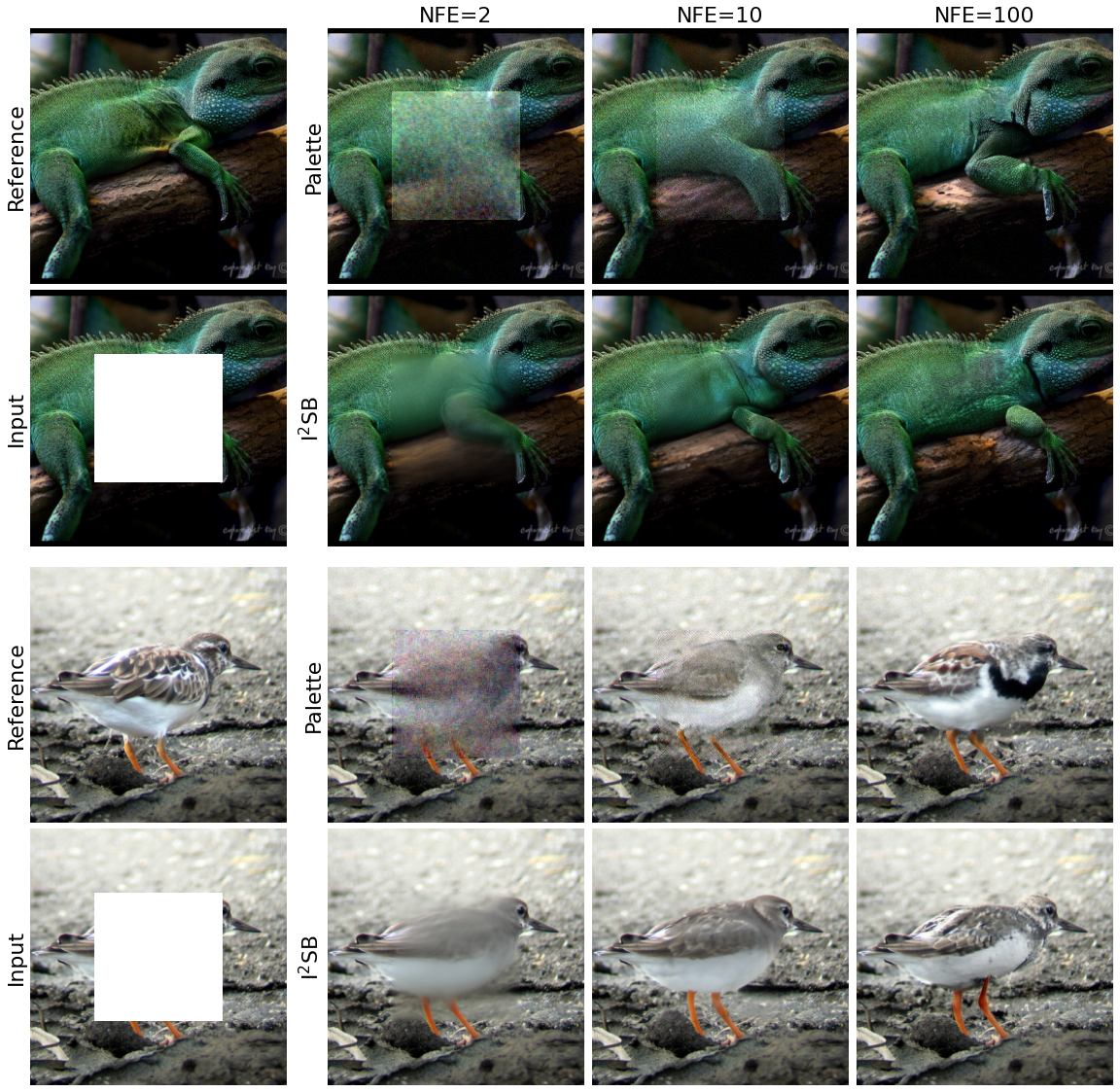

I2SB is a new class of conditional diffusion models that directly constructs diffusion bridges between two given distributions, for example the distributions of degraded and clean images. Compared with standard conditional diffusion models, it yields more interpretable generation, enjoys better sampling efficiency, and sets new records on many image restoration tasks.
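To make "a diffusion bridge between two distributions" concrete, here is a minimal sketch, not the authors' implementation, of drawing an intermediate state x_t on a Gaussian bridge pinned at a paired clean image x0 (t = 0) and degraded image x1 (t = 1). It assumes a constant noise rate `beta` (a hypothetical parameter introduced here), so the accumulated variances are sigma_t^2 = beta * t and sigma_bar_t^2 = beta * (1 - t), and the bridge reduces to a Brownian bridge. The function name `bridge_sample` is also hypothetical.

```python
import numpy as np


def bridge_sample(x0, x1, t, beta=1.0, rng=None):
    """Sample x_t from a Gaussian bridge pinned at x0 (t=0) and x1 (t=1).

    Assumption (illustrative only): constant noise rate `beta`, giving
    sigma_t^2 = beta * t and sigma_bar_t^2 = beta * (1 - t).
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    if beta <= 0:
        raise ValueError("beta must be positive")
    rng = np.random.default_rng() if rng is None else rng

    sigma2 = beta * t            # variance accumulated from time 0 to t
    sigma_bar2 = beta * (1 - t)  # variance accumulated from time t to 1
    total = sigma2 + sigma_bar2

    # Mean interpolates between the two endpoints; at t=0 it equals x0,
    # at t=1 it equals x1.
    mean = (sigma_bar2 / total) * x0 + (sigma2 / total) * x1
    # Bridge variance vanishes at both endpoints, so samples are pinned there.
    std = np.sqrt(sigma2 * sigma_bar2 / total)
    return mean + std * rng.standard_normal(np.shape(x0))


clean = np.zeros((4, 4))     # stand-in for a clean image
degraded = np.ones((4, 4))   # stand-in for its degraded counterpart
x_mid = bridge_sample(clean, degraded, 0.5, rng=np.random.default_rng(0))
```

Because the bridge is pinned at both endpoints, sampling starts from the degraded image itself rather than from pure Gaussian noise, which is one intuition for why such bridges suit restoration tasks.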